Subject-driven text-to-image generation via W Chen is a method in artificial intelligence that lets computers create images of a specific subject from text descriptions. Instead of fine-tuning a model for each new subject, this approach relies on in-context learning, which makes it more efficient and adaptable. W Chen’s method lets AI systems generate high-quality, contextually accurate images from just a few examples or instructions. This has strong potential in areas like creative content generation, marketing, and educational tools.

The beauty of subject-driven text-to-image generation via W Chen lies in its ability to adapt to a wide range of subjects without the need for extensive retraining. By leveraging in-context learning, the system is able to learn and apply new concepts on the fly, which opens up exciting possibilities for content creators and developers. In this blog post, we’ll dive deeper into how this technology works and why it’s creating such a buzz in the AI community.

What Is Subject-Driven Text-to-Image Generation via W Chen?

Subject-driven text-to-image generation via W Chen is a new way for computers to create images from text descriptions. You describe something in words, and the computer turns those words into a picture. Imagine telling a computer, “A happy dog playing in the park,” and getting back an image that matches your description. This is what W Chen’s method helps to do, but in a smarter and faster way.

The biggest thing that makes W Chen’s method different is how it learns. Unlike systems that have to be fine-tuned again for every new subject, W Chen’s method uses something called “in-context learning.” This means that the computer can pick up a subject from just a few examples supplied alongside the request and produce accurate images of it. It’s like showing the computer a few pictures of your dog and saying, “This is what my dog looks like,” and then asking it to make new pictures of that dog from your description. Because the model does not need to be retrained for each subject, the process is much faster.

This method is exciting for artists, designers, and anyone who needs to create images quickly. Instead of spending lots of time drawing or finding the right images, you can just write a description, and the computer will do the hard work of creating it. W Chen’s technology has the potential to change how we create content and design things, making it easier and faster for anyone to bring their ideas to life.

How W Chen’s In-Context Learning Revolutionizes Text-to-Image AI

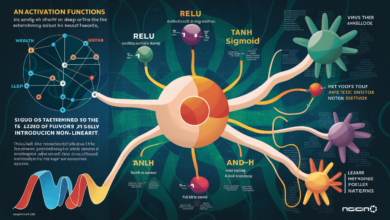

W Chen’s in-context learning method changes the way text-to-image generation works. Traditional text-to-image systems usually require a lot of data to train and build accurate models. These systems learn from big datasets of images and descriptions, but they can’t easily adapt to new or unexpected situations. In contrast, W Chen’s approach uses fewer examples and learns on the spot, making it more flexible and faster.

The key idea behind in-context learning is that the computer can take a few examples from a specific subject and then use them to generate new images. For example, if you give the system just a few pictures of cats and tell it that these are cats, the system can then create new cat images based on the description you provide. This saves time and makes it easier to teach the system new things without needing big training sets.
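To make the idea above concrete, here is a minimal sketch of how a few example images and a text prompt might be bundled into one request for an in-context generator. It is an illustration under stated assumptions, not W Chen’s actual interface: the names `Demonstration`, `GenerationRequest`, and `build_request`, the file names, and the `max_demos` limit are all hypothetical. The point is only that the examples travel with the prompt at generation time, and no training step appears anywhere.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Demonstration:
    """One example of the subject: an image reference plus a short caption."""
    image_path: str
    caption: str


@dataclass(frozen=True)
class GenerationRequest:
    """Everything a hypothetical in-context generator would see at generation time."""
    demonstrations: Tuple[Demonstration, ...]
    prompt: str


def build_request(demos: List[Demonstration], prompt: str,
                  max_demos: int = 5) -> GenerationRequest:
    """Bundle a few subject examples with the text prompt.

    max_demos is an assumed limit chosen for illustration only.
    """
    if not demos:
        raise ValueError("in-context generation needs at least one demonstration")
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    # Keep only the first max_demos examples; nothing is trained or updated here.
    return GenerationRequest(tuple(demos[:max_demos]), prompt.strip())


# Hypothetical example files describing one specific cat.
demos = [
    Demonstration("cat_01.png", "a photo of my cat"),
    Demonstration("cat_02.png", "my cat on the sofa"),
    Demonstration("cat_03.png", "my cat in the garden"),
]
request = build_request(demos, "my cat sitting on a chair")
print(len(request.demonstrations), request.prompt)
```

A real system would pass a request like this to a generation model. Teaching it a different subject would mean swapping the demonstrations, not retraining anything.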

Another important benefit of in-context learning is its ability to understand context. The computer doesn’t just generate random images; it takes into account the details you give. For instance, if you ask for “a cat sitting on a chair,” it will generate that specific scene, considering both the cat and the chair, and how they fit together in the real world. This ability to understand and generate images based on context is a huge step forward in AI image generation.

The Power of Context: Understanding Subject-Driven Text-to-Image Generation

The power of subject-driven text-to-image generation lies in its ability to understand and use the subject you describe. With traditional systems, the computer can make images based on broad concepts but might struggle when it comes to specific details. W Chen’s method solves this problem by using subject-driven learning, which means it focuses on the exact details you give and adapts to them.

When you tell the system what you want, it doesn’t just guess the general idea. For example, if you ask for a “red apple on a wooden table,” the system will understand the exact color of the apple and the texture of the wooden table. It’s more precise than other systems, which might just generate any apple or table, without considering the specific details you described.

This technology is especially useful for businesses and creators who need images that match a very specific vision. Whether you’re designing a new product or creating educational materials, having a system that can accurately generate images from text can save a lot of time and effort. Subject-driven text-to-image generation allows for precise, on-demand visuals that are exactly what you need.

Applications of Subject-Driven Text-to-Image Generation via W Chen in Various Industries

Subject-driven text-to-image generation via W Chen is not just a fun tool for tech enthusiasts; it’s also useful in many industries. This technology can help businesses, designers, educators, and content creators improve their work by quickly generating images based on descriptions. Here are some of the industries where this technology can be most helpful:

Marketing & Advertising: Creating ads with images that match the specific message or theme is easier than ever. Businesses can use this technology to generate custom images for campaigns without needing expensive photoshoots.

Education: Teachers can use text-to-image generation to create visual aids for lessons. Instead of searching for images online, educators can simply describe the scene or concept, and the AI will create it for them.

Design & Art: Artists can use this tool to bring their ideas to life more quickly. Whether it’s graphic design, fashion design, or product development, having a fast way to generate images based on descriptions opens up many creative possibilities.

Gaming & Animation: Game developers and animators can use text-to-image generation for creating environments, characters, and scenes based on story descriptions. This can speed up the production process and help bring game worlds to life.

By applying this technology across various industries, W Chen’s approach helps businesses save time, reduce costs, and improve creativity. The potential for this tool to transform how industries work is huge, and it’s only just getting started.

Challenges and Opportunities in Subject-Driven Text-to-Image Generation via W Chen

While subject-driven text-to-image generation via W Chen offers many exciting benefits, there are also challenges to consider. One of the biggest challenges is ensuring that the generated images are always accurate and of high quality. While the system works well with many subjects, it may sometimes struggle with complex or highly detailed descriptions. For example, generating a highly detailed scene with multiple objects might lead to some inaccuracies or inconsistencies.
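One simple way to catch the multi-object inconsistencies mentioned above is to compare the objects a prompt asks for against the objects found in the generated image. The sketch below assumes that some separate object detector has already produced a list of labels for the image. That detector, the function name `missing_objects`, and the sample labels are hypothetical and not part of W Chen’s method.

```python
from typing import Iterable, List, Set


def missing_objects(required: Iterable[str], detected: Iterable[str]) -> List[str]:
    """Return the required object names that do not appear among the detected labels."""
    # Normalize case and whitespace so "Cat" and "cat " count as the same label.
    found: Set[str] = {label.strip().lower() for label in detected}
    return [name for name in required if name.strip().lower() not in found]


# Objects the prompt "a cat on a chair by a window" asks for.
required = ["cat", "chair", "window"]
# Hypothetical labels reported by an object detector for the generated image.
detected = ["Cat", "chair"]

print(missing_objects(required, detected))  # ['window']
```

A check like this only confirms that each object is present. It says nothing about colors, textures, or how the objects are arranged, so it is a first filter at best.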

Another challenge is the need for continuous improvement of the AI system itself. The underlying model can get better as it is developed with more data and examples, but it still needs updates to stay relevant. Like any AI technology, it has limitations that must be addressed before it can support more advanced uses.

Despite these challenges, there are many opportunities for growth. As the technology improves, it can be used for even more specific and detailed applications. With the help of better training and development, the system can become more reliable and adaptable for a wider range of industries. This means that over time, the opportunities for using subject-driven text-to-image generation via W Chen will only continue to grow.

Conclusion

Subject-driven text-to-image generation via W Chen is changing the way we create and think about images. With its ability to understand context and adapt to different subjects, it is a powerful tool for anyone who needs to create images quickly and accurately. From businesses and marketers to educators and artists, this technology is helping people bring their ideas to life more efficiently.

As AI continues to advance, the possibilities for subject-driven text-to-image generation keep expanding. The technology is likely to improve over time, allowing for more detailed and creative images based on simple text descriptions. It’s an exciting time for the world of AI, and W Chen’s approach is helping make image generation smarter, faster, and more accessible.

FAQs

Q: What is subject-driven text-to-image generation via W Chen?

A: It is a method where computers create images of a specific subject from text descriptions using in-context learning, which is faster and more adaptable than fine-tuning a model for each new subject.

Q: How does W Chen’s method work?

A: W Chen’s system uses a few examples to teach the computer and then generates images based on the context of the text you provide.

Q: What industries can benefit from this technology?

A: Many industries, including marketing, education, design, and gaming, can use this technology to create images quickly and efficiently.

Q: What are the challenges of this technology?

A: Some challenges include ensuring the generated images are always accurate and improving the system to handle more complex descriptions.

Q: Will this technology improve over time?

A: Most likely. As more data is used and the system is developed further, the technology is expected to become more reliable and more detailed.